As artificial intelligence models continue to grow in size and capability, a fundamental challenge has emerged: how to scale model capacity without proportionally increasing computational cost. Enter Mixture of Experts (MoE), an architectural pattern that has grown from a theoretical concept into the backbone of many of today’s most powerful language models. Since early 2025, nearly all leading frontier models have used MoE designs; well-known MoE systems include DeepSeek-V3, Mixtral, and, according to widely circulated but unofficial reports, GPT-4.

The concept isn’t entirely new. MoE traces its origins to the 1991 paper “Adaptive Mixtures of Local Experts,” but recent advances in hardware and training techniques have finally made it practical for large-scale deployment. Today, by at least one widely followed leaderboard, the top 10 most intelligent open-source models all use a mixture-of-experts architecture, signaling a fundamental shift in how we build AI systems.

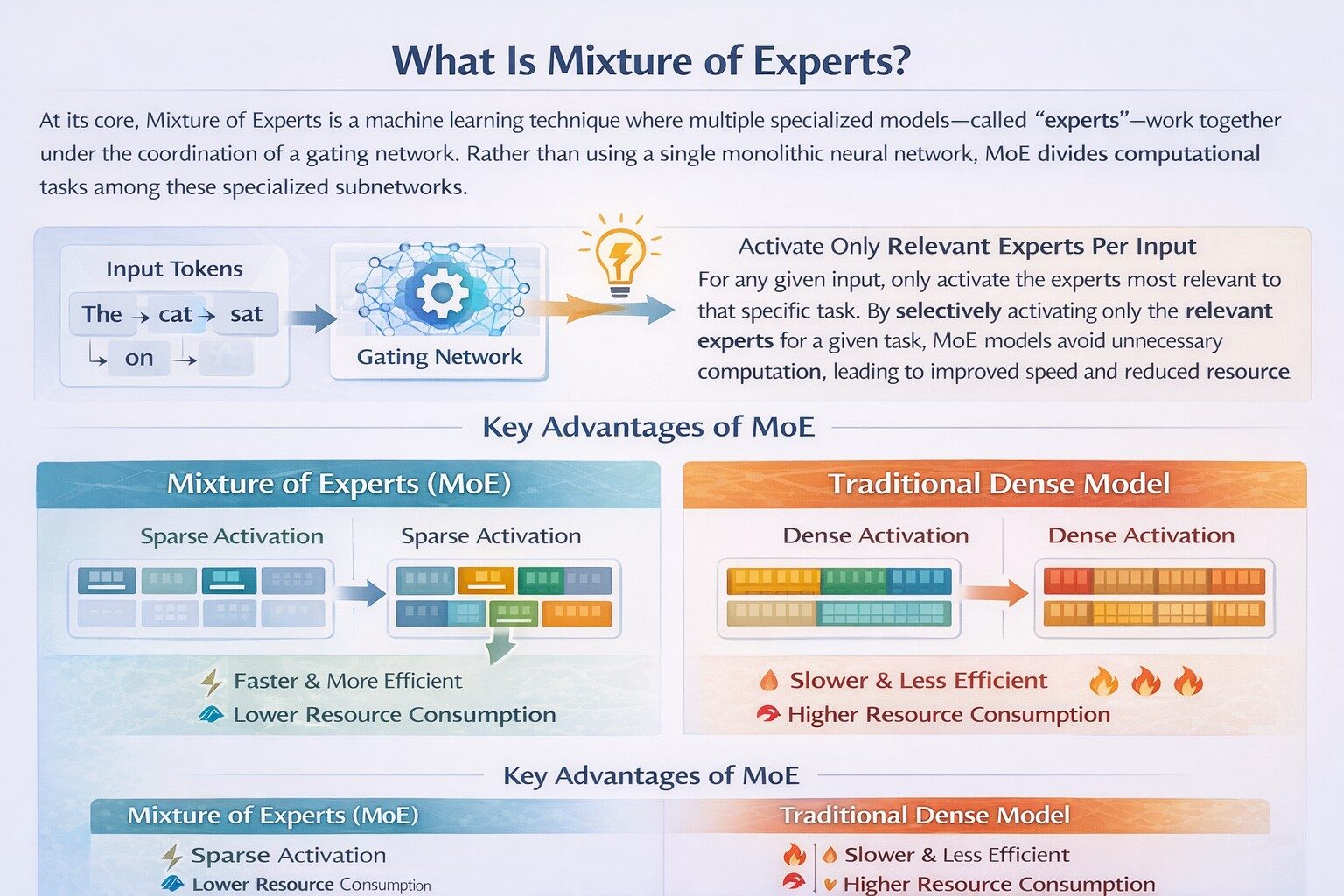

What Is Mixture of Experts?

At its core, Mixture of Experts is a machine learning technique where multiple specialized models—called “experts”—work together under the coordination of a gating network. Rather than using a single monolithic neural network, MoE divides computational tasks among these specialized subnetworks.

The architecture operates on a simple but powerful principle: for any given input, activate only the experts most relevant to it. Because the remaining experts sit idle for that input, MoE models avoid unnecessary computation, which improves speed and reduces resource consumption. This sparse activation is what fundamentally distinguishes MoE from traditional dense models, in which every parameter participates in every computation.

The Core Components

Every MoE system consists of three essential elements:

Experts: These are specialized neural networks, each designed to handle specific aspects of the input data. In modern large language models, experts are typically independent feedforward networks that replace the standard FFN layers in transformer architectures. Despite the name, an “expert” is not specialized in a domain like “Psychology” or “Biology”; at most, it learns syntactic, word-level patterns, becoming adept at handling specific tokens in specific contexts.

Router (Gating Network): This component acts as a traffic controller, analyzing each input and determining which experts should process it. The router scores every expert and selects the top-k highest-scoring ones. It uses no special tricks to make that choice: it multiplies the input by a learned weight matrix to obtain one score (logit) per expert and applies a softmax to turn those scores into probabilities.

Aggregation Mechanism: After the selected experts process the input, their outputs must be combined into a single result. This is typically achieved through weighted averaging, where the weights reflect the probabilities assigned by the gating network.
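The router and aggregation steps can be sketched in a few lines of plain Python. This is a minimal illustration, not code from any particular model: the names `route_top_k` and `moe_layer`, the one-row-per-expert layout of `w_gate`, and the toy experts are all hypothetical. It follows the Mixtral-style convention of applying the softmax only to the k selected logits, which yields the same weights as a softmax over all experts followed by renormalizing the top k.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Sequence[float]], Vector]


def softmax(logits: Sequence[float]) -> Vector:
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(
    x: Sequence[float], w_gate: Sequence[Sequence[float]], k: int
) -> List[Tuple[int, float]]:
    """Return (expert_index, gate_weight) pairs for the k highest-scoring experts.

    w_gate is a hypothetical layout with one gate vector (row) per expert.
    """
    # One logit per expert: dot product of the token with that expert's gate row.
    logits = [sum(xi * wi for xi, wi in zip(x, row)) for row in w_gate]
    # Indices of the k largest logits, highest first.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the k gate weights sum to 1.
    weights = softmax([logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(
    x: Sequence[float],
    w_gate: Sequence[Sequence[float]],
    experts: Sequence[Expert],
    k: int = 2,
) -> Vector:
    """Weighted sum of the outputs of the k experts chosen by the router."""
    out = [0.0] * len(x)
    for idx, g in route_top_k(x, w_gate, k):
        y = experts[idx](x)  # only the selected experts are ever evaluated
        out = [o + g * yi for o, yi in zip(out, y)]
    return out


# Toy usage: four "experts" that simply scale their input by different constants.
toy_experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
toy_gate = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
token = [1.0, 0.5]
print(route_top_k(token, toy_gate, k=2))
print(moe_layer(token, toy_gate, toy_experts, k=2))
```

Real routers work on batched tensors rather than Python lists, but the control flow is the same: score, select, normalize, and sum only the chosen experts' outputs.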

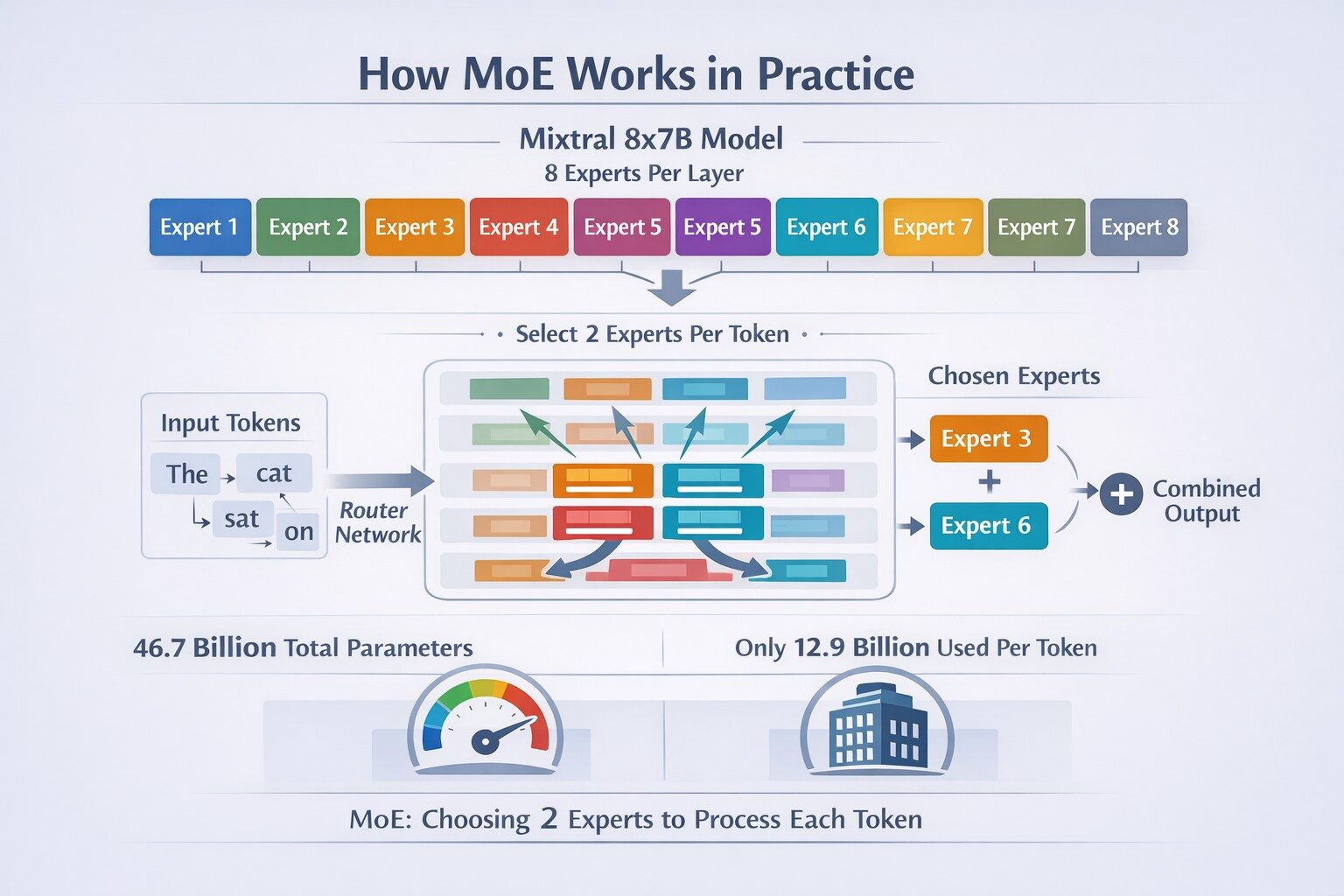

How MoE Works in Practice

To understand MoE in action, consider a concrete example. The Mixtral 8x7B model, one of the most successful open-source implementations, has eight experts in each layer. At every layer, for every token, a router network chooses two of these experts to process the token and combines their outputs additively.

Despite having 46.7B total parameters, Mixtral uses only 12.9B parameters per token, processing input and generating output at the speed of a much smaller model while maintaining the capacity of a much larger one. This efficiency makes MoE models particularly attractive for real-world deployment.
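A little algebra shows how Mixtral's two published numbers fit together. As a simplifying assumption (not an official breakdown), suppose all non-expert parameters (attention, embeddings, norms, routers) are shared and always active, and every expert is the same size. Then total = shared + 8 × expert and active = shared + 2 × expert, where "expert" means one expert's parameters summed over all layers. The hypothetical helper below solves those two equations:

```python
def split_params(total_b: float, active_b: float, n_experts: int, top_k: int):
    """Solve total = shared + n_experts*expert and active = shared + top_k*expert.

    Assumes every expert has the same size and all non-expert weights are always active.
    Values are in billions of parameters.
    """
    expert_b = (total_b - active_b) / (n_experts - top_k)
    shared_b = active_b - top_k * expert_b
    return shared_b, expert_b


shared, expert = split_params(46.7, 12.9, n_experts=8, top_k=2)
print(f"shared ~ {shared:.2f}B, one expert (all layers) ~ {expert:.2f}B")
# -> shared ~ 1.63B, one expert (all layers) ~ 5.63B
```

Under these assumptions, one expert plus the shared weights comes to roughly 7.3B parameters (the "7B" in the name), while the total is 46.7B rather than 8 × 7B = 56B because the shared weights are counted only once.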

The Sparse Activation Advantage

The key innovation lies in sparse activation: the principle that not all parameters need to be used for every computation. By activating only a subset of parameters for each token, the MoE architecture can increase model size without a matching increase in computation, achieving a better trade-off between performance and training cost.

Consider DeepSeek-R1, which exemplifies this approach at scale. The model packs a whopping 671 billion parameters, but thanks to MoE, it activates only about 37 billion of them for any given token. Its per-token compute is therefore comparable to that of a 37B-parameter dense model, while it draws on the knowledge and capacity of a 671B-parameter system.
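The per-token savings can be made concrete with a common back-of-the-envelope rule: a forward pass costs roughly 2 FLOPs (one multiply and one add) per active parameter per token. This rule is an approximation that ignores attention-score computation, which grows with context length, so the printed numbers are rough estimates only; the helper names are hypothetical.

```python
def active_fraction(total_params: float, active_params: float) -> float:
    """Share of the model's weights that a single token actually uses."""
    return active_params / total_params


def approx_forward_flops(active_params: float) -> float:
    # Rule of thumb: ~2 FLOPs per active parameter per token (attention scores ignored).
    return 2.0 * active_params


models = [
    ("DeepSeek-R1", 671e9, 37e9),
    ("Mixtral 8x7B", 46.7e9, 12.9e9),
]
for name, total, active in models:
    sparse = approx_forward_flops(active)
    dense = approx_forward_flops(total)  # a hypothetical dense model of the same total size
    print(
        f"{name}: {active_fraction(total, active):.1%} of weights active, "
        f"~{sparse / 1e9:.0f} vs ~{dense / 1e9:.0f} GFLOPs per token "
        f"({dense / sparse:.1f}x less compute)"
    )
```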

Architectural Innovations in Modern MoE

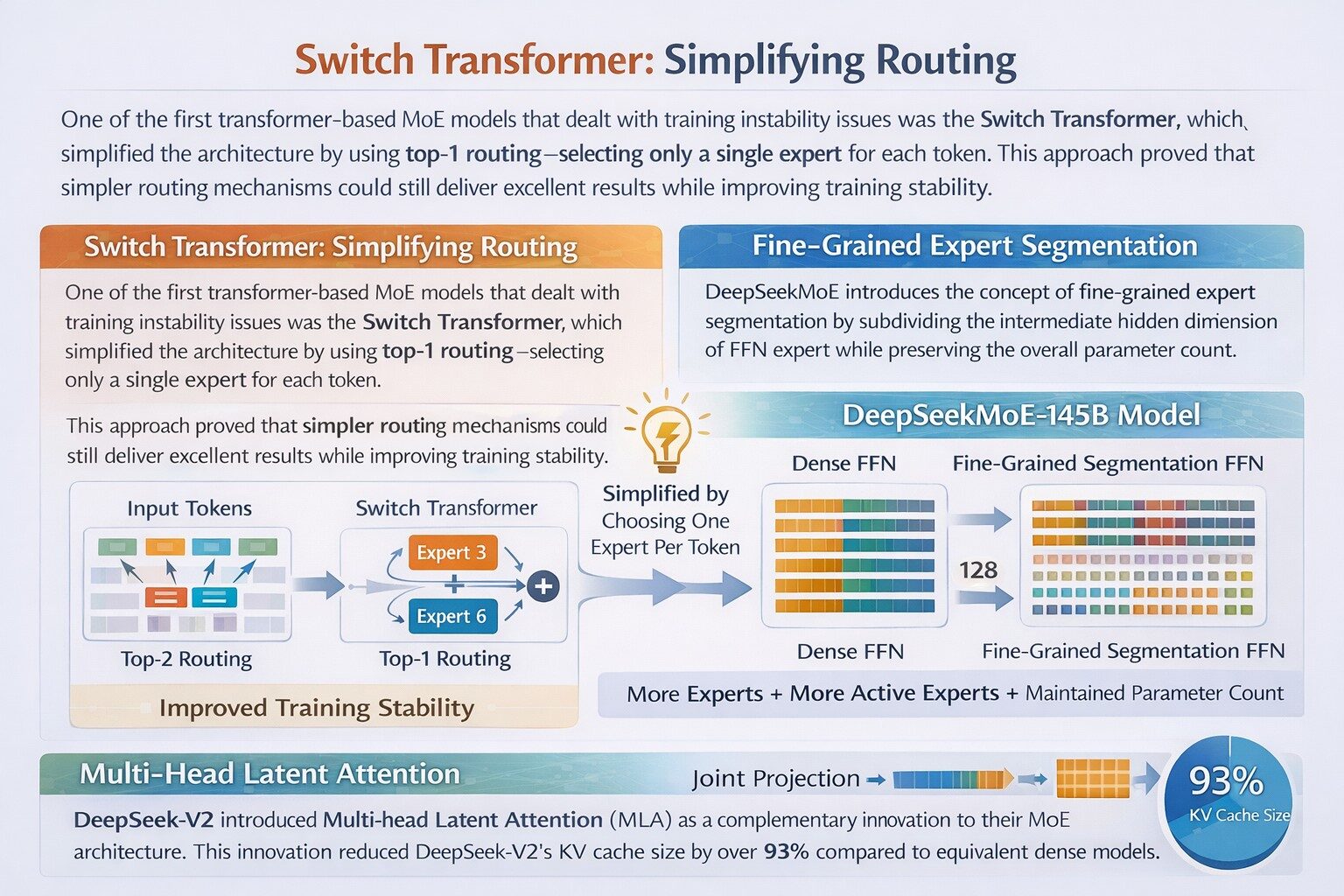

Switch Transformer: Simplifying Routing

One of the first transformer-based MoE models that dealt with training instability issues was the Switch Transformer, which simplified the architecture by using top-1 routing—selecting only a single expert for each token. This approach proved that simpler routing mechanisms could still deliver excellent results while improving training stability.
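Top-1 routing can be sketched as follows. In this minimal illustration (hypothetical names, toy dimensions), the router takes a softmax over all experts, sends the token to the single highest-probability expert, and scales that expert's output by its probability. The gate value is deliberately not renormalized: with only one expert selected, renormalizing would always give 1, so the router's probabilities would no longer affect the output and the router would receive no gradient through this path.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> Vector:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def switch_route(
    x: Sequence[float], w_gate: Sequence[Sequence[float]]
) -> Tuple[int, float]:
    """Top-1 routing: return the chosen expert's index and its router probability."""
    logits = [sum(xi * wi for xi, wi in zip(x, row)) for row in w_gate]
    probs = softmax(logits)  # softmax over ALL experts
    best = max(range(len(probs)), key=lambda i: probs[i])
    return best, probs[best]


def switch_layer(
    x: Sequence[float],
    w_gate: Sequence[Sequence[float]],
    experts: Sequence[Callable[[Sequence[float]], Vector]],
) -> Vector:
    idx, p = switch_route(x, w_gate)
    # Exactly one expert runs; its output is scaled by its router probability
    # (not renormalized to 1, so the router still influences the result).
    return [p * y for y in experts[idx](x)]
```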

Fine-Grained Expert Segmentation

DeepSeek introduced a notable concept with its DeepSeekMoE architecture: fine-grained expert segmentation, which subdivides each FFN expert into smaller experts by splitting its intermediate hidden dimension, while preserving the overall parameter count.

The DeepSeekMoE-145B model demonstrates this approach at scale: each expert's intermediate hidden dimension is one-eighth that of a standard dense FFN, and both the number of experts (from 16 to 128) and the number of active experts (from top-2 to top-16) grow by a factor of eight. This strategy enables more precise knowledge decomposition and more flexible combinations of activated experts.
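The arithmetic behind fine-grained segmentation is easy to check. The sketch below uses hypothetical layer sizes (d_model = 1024 and a dense FFN hidden size of 4096) and models each expert as a plain two-matrix FFN with biases ignored; neither detail comes from DeepSeekMoE itself. Splitting every expert eight ways leaves both total and active expert parameters unchanged, while the number of possible expert combinations per token grows enormously.

```python
from math import comb


def expert_params(n_experts: int, d_model: int, d_hidden: int) -> int:
    # Each expert: up-projection (d_model x d_hidden) + down-projection (d_hidden x d_model).
    return n_experts * 2 * d_model * d_hidden


D_MODEL, D_HIDDEN = 1024, 4096  # hypothetical sizes for illustration
FACTOR = 8                      # segmentation factor described for DeepSeekMoE-145B

configs = {
    "coarse": (16, 2, D_HIDDEN),                            # 16 experts, top-2
    "fine": (16 * FACTOR, 2 * FACTOR, D_HIDDEN // FACTOR),  # 128 experts, top-16
}

for name, (n, k, h) in configs.items():
    total = expert_params(n, D_MODEL, h)
    active = expert_params(k, D_MODEL, h)
    print(f"{name}: total={total:,} active={active:,} combinations={comb(n, k):,}")
```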

Multi-Head Latent Attention

DeepSeek-V2 introduced Multi-head Latent Attention (MLA) as a complement to its MoE architecture. MLA shrinks the memory consumed by the model’s KV cache through a low-rank joint projection: instead of caching full key and value vectors, the model caches a much smaller latent vector from which they can be reconstructed. According to the DeepSeek-V2 paper, the model's KV cache is 93.3% smaller than that of DeepSeek 67B, the company's earlier dense model.
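A rough per-token, per-layer count shows why caching a latent vector saves so much memory. The dimensions below (128 heads of size 128, a 512-dimensional latent, and a 64-dimensional decoupled positional key) are assumptions taken from the published DeepSeek-V2 configuration, and the baseline is a hypothetical standard multi-head attention layer with the same heads. Because that baseline differs from the one behind the reported 93% figure, the percentage printed here is not meant to reproduce it.

```python
def mha_cache_per_token_layer(n_heads: int, head_dim: int) -> int:
    # Standard multi-head attention caches a full key AND value vector for every head.
    return 2 * n_heads * head_dim


def mla_cache_per_token_layer(latent_dim: int, rope_key_dim: int) -> int:
    # MLA caches one compressed latent vector (keys and values are re-projected from it)
    # plus a small decoupled positional key shared across heads.
    return latent_dim + rope_key_dim


# Assumed DeepSeek-V2-like dimensions; treat as illustrative.
mha = mha_cache_per_token_layer(n_heads=128, head_dim=128)
mla = mla_cache_per_token_layer(latent_dim=512, rope_key_dim=64)
print(f"MHA: {mha} cached elements, MLA: {mla} cached elements, reduction: {1 - mla / mha:.1%}")
# -> MHA: 32768 cached elements, MLA: 576 cached elements, reduction: 98.2%
```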

The Challenge of Load Balancing

One of the most significant challenges in MoE training is load balancing—ensuring that computational work is distributed evenly across experts and hardware.

The Load Imbalance Problem

MoEs are prone to routing collapse, in which the router keeps selecting the same few experts during training, so the others receive too little training signal to learn. When experts are distributed across different training nodes, load imbalance also creates computational bottlenecks: overloaded devices fall behind while others sit idle, which severely hurts training efficiency.

Traditional Solutions: Auxiliary Loss

A commonly accepted solution to this problem is an auxiliary loss, a regularization term that rewards balanced expert usage but competes with the model's primary training objective. The challenge lies in choosing its coefficient: too small a value yields insufficient load balancing, while too large a value impairs model performance.
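One widely used form of this auxiliary loss comes from the Switch Transformer: α · N · Σᵢ fᵢ · Pᵢ, where N is the number of experts, fᵢ is the fraction of tokens dispatched to expert i, and Pᵢ is the average router probability assigned to expert i; α is the coefficient whose tuning is described above. The sketch computes it for one batch; the function name and the default α = 0.01 are illustrative choices, and other MoE models use different variants. The loss equals α when routing is perfectly uniform and rises toward α · N as routing collapses onto a single expert.

```python
from typing import Sequence


def load_balancing_loss(
    expert_choice: Sequence[int],
    router_probs: Sequence[Sequence[float]],
    n_experts: int,
    alpha: float = 0.01,
) -> float:
    """Switch-Transformer-style auxiliary loss: alpha * N * sum_i f_i * P_i.

    expert_choice[t] is the expert token t was dispatched to (top-1);
    router_probs[t] is the router's full softmax distribution for token t.
    """
    n_tokens = len(expert_choice)
    counts = [0] * n_experts
    for e in expert_choice:
        counts[e] += 1
    # f_i: fraction of tokens dispatched to expert i.
    f = [c / n_tokens for c in counts]
    # P_i: mean router probability assigned to expert i.
    p = [sum(probs[i] for probs in router_probs) / n_tokens for i in range(n_experts)]
    return alpha * n_experts * sum(fi * pi for fi, pi in zip(f, p))


uniform = load_balancing_loss([0, 1, 2, 3], [[0.25] * 4] * 4, n_experts=4)
collapsed = load_balancing_loss([0, 0, 0, 0], [[1.0, 0.0, 0.0, 0.0]] * 4, n_experts=4)
print(uniform, collapsed)
```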

The DeepSeek-V3 Innovation

DeepSeek-V3 introduced an auxiliary-loss-free approach that represents a significant advance. It decouples expert selection from the weighting of expert outputs. A per-expert bias term is added to the affinity scores when choosing the top-k experts, and after each training step the bias is lowered for overloaded experts and raised for underloaded ones. The adjusted selection scores narrow the gaps between experts, so subsequent tokens are spread more evenly.

Importantly, this bias affects only expert selection. The gating value that weights each selected expert's output is still derived from the original affinity scores, so balancing the load does not distort how much each chosen expert contributes.
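The mechanism can be sketched as follows. Following the DeepSeek-V3 description, affinities are sigmoid scores, the bias enters only the top-k selection, gate values are the unbiased affinities normalized over the selected experts, and after each step every expert's bias moves by a fixed step γ depending on whether it was over- or underloaded. The function names, the use of the mean load as the overload threshold, and the toy numbers are assumptions made for this illustration.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def select_experts(
    logits: Sequence[float], bias: Sequence[float], k: int
) -> List[Tuple[int, float]]:
    """Pick k experts using biased scores, but weight them with unbiased affinities."""
    affinity = [sigmoid(z) for z in logits]
    # Selection: the bias only shifts which experts make the top-k cut...
    chosen = sorted(
        range(len(affinity)), key=lambda i: affinity[i] + bias[i], reverse=True
    )[:k]
    # ...weighting: gate values use the original affinities, normalized over the chosen set.
    total = sum(affinity[i] for i in chosen)
    return [(i, affinity[i] / total) for i in chosen]


def update_bias(bias: Sequence[float], load: Sequence[int], gamma: float) -> List[float]:
    """After a step, push overloaded experts down and underloaded experts up by gamma."""
    mean_load = sum(load) / len(load)  # assumed threshold for "over/underloaded"
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - gamma)
        elif n < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias


logits = [2.0, 1.0, 0.0, -1.0]
print(select_experts(logits, [0.0, 0.0, 0.0, 0.0], k=2))   # unbiased choice
print(select_experts(logits, [-1.0, 0.0, 0.5, 0.0], k=2))  # bias steers selection away from expert 0
print(update_bias([0.0] * 4, load=[10, 2, 4, 0], gamma=0.001))
```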

Real-World Performance and Applications

Breakthrough Models

The success of MoE is evident in the performance of recent models:

DeepSeek-V3: With 671 billion parameters and only 37 billion activated per token, this model required just 2.788 million H800 GPU hours to train, or approximately $5.6 million at an assumed rental price of $2 per GPU hour. That figure covers only the final training run, not prior research or ablation experiments, but it still contrasts sharply with commonly cited estimates of $50 to 100 million for GPT-4.
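The dollar figure follows directly from the GPU-hour count and an assumed rental price of $2 per H800 GPU hour, the rate DeepSeek's technical report uses for its own estimate; actual costs depend on how the hardware is sourced. The helper name is hypothetical.

```python
def training_cost_usd(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Rental-equivalent cost of a training run (ignores research, ablations, staff, data)."""
    return gpu_hours * usd_per_gpu_hour


cost = training_cost_usd(gpu_hours=2.788e6, usd_per_gpu_hour=2.0)
print(f"${cost / 1e6:.3f}M")  # -> $5.576M
```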

Mixtral 8x7B: With only 12.9B active parameters, this model matches or outperforms Llama 2 70B and GPT-3.5 across the benchmarks reported by its developers.

OLMoE: This 7B-parameter model activates only about 1B parameters per token, suggesting that MoE could bring capable models to on-device applications with minimal computational overhead.

Hardware Optimization

Recent hardware developments have specifically targeted MoE deployment. NVIDIA reports that its GB200 NVL72 system runs MoE models such as Kimi K2 Thinking and DeepSeek-R1 up to 10x faster than previous-generation hardware, at one-tenth the cost per token.

This performance leap transforms the economics of AI deployment. Because the system processes ten times as many tokens using the same time and power, the cost per individual token drops dramatically—making complex MoE models practical for everyday applications.

Applications Beyond Language Models

While MoE has found its greatest success in large language models, the architecture is expanding to other domains:

Computer Vision: Google’s V-MoEs architecture applies MoE to Vision Transformers, partitioning images into patches and dynamically selecting appropriate experts for each patch. This optimizes both accuracy and efficiency, with the flexibility to decrease selected experts per token without additional training.

Recommendation Systems: Google researchers have proposed Multi-Gate Mixture of Experts systems for YouTube video recommendations, demonstrating MoE’s effectiveness in handling diverse user preferences and content types.

Translation Systems: Microsoft’s Z-code translation API uses MoE to support massive model parameter scales while maintaining constant computational costs, enabling high-quality multilingual translation.

Challenges and Limitations

Despite its advantages, MoE presents several challenges:

Training Complexity

MoE models have more hyperparameters than traditional models, including the number of experts, router architecture, and load balancing coefficients. Tuning these parameters can be time-consuming and requires careful experimentation.

Inference Overhead

Although MoE reduces computation per token, inference can face bottlenecks. The gating network must run for each input, and selecting and activating experts adds overhead. Running multiple experts in parallel requires advanced scheduling and resource management.

Memory Requirements

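MoE models are much larger than dense models with the same per-token compute, because they store many experts, which increases overall storage requirements. Although only a subset of experts is active for any given token, the entire model must still be loaded into memory, since different tokens are routed to different experts.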
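A quick estimate shows why memory, rather than compute, often becomes the constraint. This sketch counts weights only (no KV cache, activations, or framework overhead), uses decimal gigabytes, and picks example precisions for illustration; deployed models may use other formats, and the names are hypothetical.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


TOTAL, ACTIVE = 671e9, 37e9  # DeepSeek-V3/R1 parameter counts quoted in this article
for dtype in ("bf16", "fp8"):
    print(
        f"{dtype}: all weights ~{weight_memory_gb(TOTAL, dtype):,.0f} GB "
        f"(vs ~{weight_memory_gb(ACTIVE, dtype):,.0f} GB if only active weights had to be resident)"
    )
```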

Expert Specialization

Research into expert behavior has produced intriguing findings. One recent study reports that individual neurons act like fine-grained experts, that the router tends to select experts with larger output norms, and that expert diversity increases in deeper layers. Understanding and leveraging these patterns remains an active area of research.

The Future of MoE

The trajectory of MoE development points toward several exciting directions:

Adaptive Architectures

Future MoE systems may employ adaptive routing that adjusts based on context and task requirements, potentially incorporating hierarchical expert organization and dynamic expert creation.

Agentic Systems

MoE’s core principle mirrors the structure of agentic AI systems, where different agents specialize in planning, perception, reasoning, and tool use. Extending this to production environments could enable shared expert pools accessible to multiple applications, with requests routed to appropriate experts on demand.

Efficiency Gains

Continued optimization of hardware and software for MoE will likely deliver further improvements in cost and performance. The combination of specialized chips, improved routing algorithms, and better load balancing strategies will make MoE even more practical for deployment.

Democratization

Many prominent recent MoE models, including DeepSeek-V3, Mixtral, and OLMoE, have been released with open weights, reflecting a 2024-2025 trend toward democratizing MoE research. This openness enables practitioners worldwide to study, build upon, and innovate with these architectures.

Conclusion

Mixture of Experts represents a fundamental shift in how we approach model scaling. By activating only a small fraction of a massive parameter space for each token, MoE makes it possible to build models with unprecedented capacity while keeping per-token computation modest. That decoupling of capacity from compute is why MoE has become essential for frontier-scale models.

From GPT-4’s reported MoE architecture to DeepSeek’s training at a fraction of traditional costs, and from Mixtral’s open-source success to hardware that its vendor says delivers 10x speedups, MoE has established itself as the architecture of choice for frontier AI models.

As we continue to push the boundaries of artificial intelligence, MoE is likely to play a central role in making powerful AI systems both more capable and more accessible. The combination of increased model capacity, computational efficiency, and cost-effectiveness positions MoE as a cornerstone technology for the next generation of AI applications.

The revolution is not just in making models bigger—it’s in making them smarter about which parts to use, when to use them, and how to coordinate their collective intelligence. That’s the true promise of Mixture of Experts.